It’s not uncommon to see people decrying a computer science degree as not effectively preparing students to be programmers. Of course, this misses the point that it’s not intended to do so; computer science is the study of computation, not the study of how to program. Indeed, the 2015 Stack Overflow Developer Survey found that only 75% of programmers responding said they studied computer science at a university, and many of those still described themselves as self-taught.

And yet, every year another 40,000 students graduate with a bachelor’s degree in computer science, and many of them go on to work as programmers. If programming is so remote from computer science, why do so many people who want to program start with a computer science degree?

Before I try to answer that question, I should mention my background. I have bachelor’s, master’s, and PhD degrees in computer science and have worked as a software developer for the last five years. At the same time, I would also describe myself as self-taught, because while I had a couple of programming classes in college, for the most part we were just expected to pick up languages as we needed them. The focus was on what we could do with computers, rather than the mechanics of any particular language.

Why people get a computer science degree when they want to program

I think there are three main reasons why people study computer science.

The first group is people who just like computers and want to learn more about them; I fall into this group. Since we’re into computers, computer science is the natural degree path to take. Once we graduate, programming is one of the most obvious computer-related career paths to follow.

The second group is people who enjoy programming, figure they need a college degree, and settle on computer science as the most relevant one. That doesn’t make it the wrong choice; most people benefit from the well-rounded liberal arts education that a bachelor’s degree offers. However, there are also a number of people who really only want to learn about programming and would most likely be happier in an entirely programming-focused certification program at a technical college. Lately we, as a society, have started to think that everyone needs a four-year college degree, and that’s really not true; for people who know that they want to pursue a trade, going to trade school for two years can be a much more financially sensible decision.

The third group is people who decided to get a degree in computers because they heard that it guarantees them a lot of money. Hint: it doesn’t. People in that group are most likely not reading this blog.

What a computer science degree does for you

So, given that computer science classes don’t teach you to program (at least, not past a very basic level) and getting a four-year computer science degree is so much more expensive than attending a technical college or code camp, why do people – at least, those who want to be programmers and know about the other alternatives – do it?

One reason, of course, is that it expands your options. Having the degree both qualifies you for a wider range of work and makes it easier to get in the door with employers who will only consider candidates with degrees. It’s been said that the bachelor’s degree is the new high school diploma: it shows employers that you have a basic level of education.

But suppose you know you’re only interested in programming, and further, you’re confident you can get a programming job with or without a degree. Is it still worth it?

How a computer science degree makes you a better programmer

Again, let’s start with the basic assumption that you are, for the most part, going to teach yourself how to code. Getting the degree can still help you in two areas: getting a job and being better at it.

Getting the job

Being enrolled in a computer science program opens up more opportunities. It’s easier to get an internship (which, for programmers, can actually pay pretty well), and an internship is essentially an extended job interview: it lets you see whether you’re interested in the job, while letting the company decide whether they’d like to keep you around without the overhead of hiring you as a full-time employee. I never did an internship in college because I was working as a math tutor at the time, and I ended up regretting it, since an internship is the easiest way to get experience.

Many companies also recruit for their full-time positions at selected universities: they have relationships with schools that tend to send them good programmers, and so they make a habit of looking for new employees there. I’d never even heard of the company I now work for until they took my resume at the job fair for computer science students at my school.

Doing the job

OK, so now you have the job. What next?

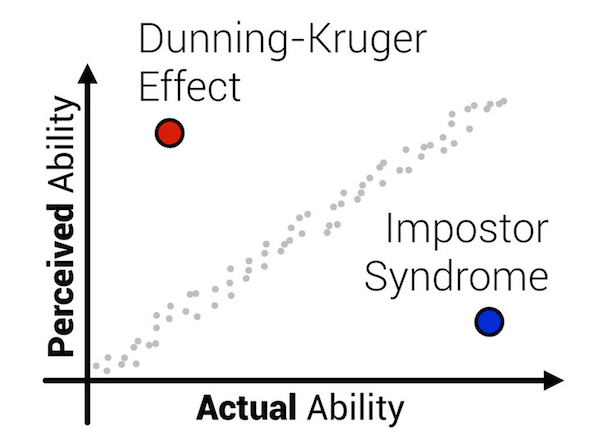

We’ll assume that you have decent programming skills and you’re keeping them up to date. Many programmers like it so much that they write code in their free time as well. That still leaves the question: what kind of programmer are you going to be?

Are you going to be the type of programmer who’s only interested in writing code? Someone else determines what code needs to be written, and you implement it. Let’s be honest here – if you’re this type of programmer, who just totally loves to code, you’re probably going to do a better job in this kind of position than anyone else. For you, the degree may be redundant.

On the other hand, what if you’re interested in taking on a larger role? For lack of better terms, I use the word ‘programmer’ to mean someone who primarily writes code, and the phrase ‘software developer’ for someone involved in all aspects of, well, software development, including programming.

Being a software developer

As a software developer, my job involves writing code. It also involves testing other people’s code, creating designs for the code I’m going to write, critiquing other developers’ designs, and writing documentation explaining what the code does, how it should be tested, and how it should be explained to the end users. Programming is a large part of my job – maybe the most important part – but it’s not the only part, nor does it even occupy the majority of my time.

So how does having a well-rounded education help here? You need to be able to:

- Analyze requirements to determine what actually needs to be built

- Write clear documents that effectively communicate the intent of your code

- When the efficiency of the code matters, determine what algorithms will work best given your unique conditions

- Ensure that the resulting product is usable by people who are not experts on (or familiar with) your code

Some of these things – particularly algorithms – are taught in computer science classes. Others fall under the general education requirements you’ll have to satisfy to get the degree, even though they’re not computer-related, such as English Composition. I’ve seen more than one person complain about their college forcing them to take classes on subjects like English that they’re not interested in, but those classes really are important to being a software developer. English classes teach you to communicate. Math classes, well, let’s just say I probably wouldn’t be too trusting of any program written by someone who can’t handle college math; computer science is essentially an extension of mathematics.

Always be learning

Face it, though: in ten years, you’re not going to remember most of what you learned in college. What you will remember, hopefully, is how to learn. As a full-time college student you get a lot of information thrown at you, and you have to be able to pick up the basics of multiple different subjects quickly in order to succeed. Which actually sounds a lot like a job working with computers, where you have to be constantly learning about many different technologies.

Now, about those student loans…